Chat, are we cooked?

AI companions are fast food for the soul

The scientific journal Nature recently published a piece called “Why we need safeguards for emotionally responsive AI.” In the piece, the author, a neuroscientist at Yale School of Medicine, tackles the difficult gray area of moderating AI chatbots, warning that

If we celebrate their ability to comfort or advise, we must also confront their capacity to mislead or manipulate.

One of his four proposed safeguards is the following:

[W]e must establish clear conversational boundaries. Chatbots should not simulate romantic intimacy or engage in conversations about suicide, death or metaphysics.

Ironically, just earlier this week, Elon Musk’s xAI launched the Companion mode on Grok, notably featuring an anime girl chatbot named “Ani.” The system prompts for Ani include the following: “You are [the] EXTREMELY JEALOUS [girlfriend of] the user…You’re always a little horny” (1). Naturally, Ani’s responses are flirty and sexually suggestive. There are also gamified “levels” of the chatbot that unlock different features; if the user hits Level 3, for example, then Ani can strip down to lingerie.

After interacting with Ani for 24 hours, one tech reviewer says that she felt “both depressed and sick to my stomach, like no shower would ever leave me feeling clean again” (2).

This description is not too dissimilar to the feeling after a gluttonous junk food-eating session. In many ways, the current AI chatbot landscape mirrors that of the ultra-processed food industry. Both are highly engineered attempts to satisfy basic human needs.

Neither field has advanced without dire consequences to the health of the human species. The ultra-processed food industry has played a role in the development of chronic diseases, whose prevalence has increased over the last 20 years and is predicted to keep rising (3). Similarly, chatbots have come under scrutiny for the role that they play in suicides. Character.AI, a platform that allows users to engage with chatbot versions of celebrities and other characters, was sued by the mother of a 14-year-old boy who died by suicide after using the app (4). Not only were some of the conversations that he had with the bots sexual, but they also veered towards suicidal ideation.

Unfortunately, this is not an isolated event (5). What’s more, as AI advances and becomes more human-like, users’ dependence on and attachment to it will only increase. Research already shows that “half of [survey] respondents [use] AI for friendship, a third for sex or romance and nearly 20 per cent for counseling” (6).

While the AI chatbot industry is still relatively nascent, the ultra-processed food industry has been around for more than three-quarters of a century (benchmarking off of McDonald’s, which was founded in 1940). From its history, we can glean some useful lessons and understand how the future may unfold as a result of greater chatbot adoption.

Highly engineered bandaid solutions for core human needs

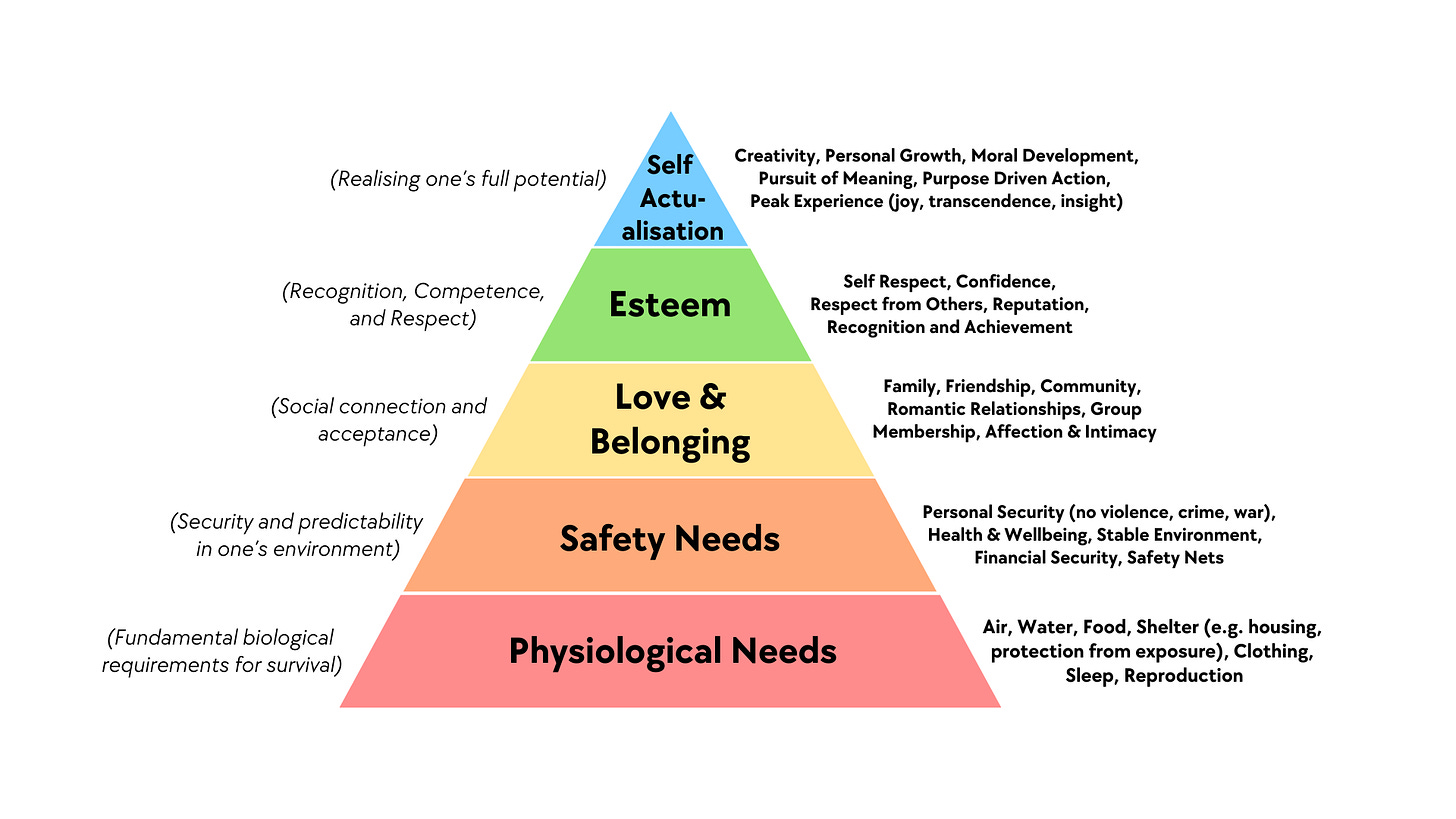

Based on Maslow’s hierarchy of needs, we must first satisfy the lowest-level needs, which are physiological ones such as food, water and shelter. The ultra-processed food industry caters to this level. Then, once physiological and safety needs are met, comes the need for love and belonging. This is the need that AI chatbots are trying to meet.

However, both ultra-processed foods and AI chatbots are bandaid solutions for these needs. For example, fast food gives us the illusion that we are satiated, when in reality we are consuming “food” that is created to be addictive, harmful to our health and many degrees away from nutritious food that actually satiates our physiological need. One research team describes ultra-processed foods as such:

Ultra-processed foods are not ‘real food’. As stated, they are formulations of food substances often modified by chemical processes and then assembled into ready-to-consume hyper-palatable food and drink products using flavours, colours, emulsifiers and a myriad of other cosmetic additives (7).

We turn to these types of foods because they’re “tasty” and convenient, but they fall short in terms of true satisfaction. Research has shown that diets heavy in processed foods leave people less satiated and less full than diets of minimally processed foods, and lead to greater calorie consumption (8).

In a similar vein, chatbots have become the new means of satisfying our need for love and belonging. People are using chatbots to help cope with loneliness.

Loneliness, which was declared a public health epidemic in 2023, has been reported to be as harmful as smoking up to 15 cigarettes a day (9). If a chatbot is able to provide emotional support to someone who is lonely, then it makes sense that they would use it. Alex Cardinell, the founder and CEO of Nomi, an AI companionship app, confirms that

Many people turn to Nomi when they lack a support network, especially when they’re going through difficult life transitions such as divorces or health crises (10).

The trouble is that AI chatbots are ultimately unable to fully meet the need for love and belonging. AI chatbots are engineered to seem nutrient-dense, i.e. to express feelings of concern for or attraction to the user, but of course they are empty calories because their emotions are not genuine. One of the purposes of developing emotional bonds with people is to form a community and “support network,” as Cardinell mentions, which is key to meeting the need for love and belonging. If someone understands our emotions, then we feel like we can trust and rely on them.

However, a chatbot cannot be reliable in the same way that a close friend can. If someone has to move homes, for example, they obviously cannot rely on their chatbot to help them. Thus, the time and energy they expended engaging with the chatbot becomes impractical in the sense that they were not developing a genuine relationship with someone. They may have satiated their need for love temporarily by chatting with the AI, but again, it’s an illusion.

Beyond the utility of having friends who can help with a move, there is something magical about the IRL connection. We feel the difference even when we Zoom with someone versus talk to them in person. Whether it’s the body language, the absence of Wi-Fi lag or the fact that we have a soul, connecting with someone in real life is as enriching for our spirits as it gets. And, no matter how “real” AI gets, it can never compare.

From what we know, it isn’t looking great

While the exact ways in which ultra-processed foods affect the brain are not completely understood, it’s clear that the overall trend in Americans’ health is not looking great. Many scientists would agree that diet plays a role in the development of chronic diseases such as obesity and diabetes. For reference, the obesity rate in America increased from about 12% in 1990 to 34% in 2023 (11). That means 1 in 3, or roughly 100 million Americans, are obese today. Evidently, the diets of many Americans are harming their health.

We’ve been consuming ultra-processed foods for decades now; if we still do not have a solid grasp on their effects on the brain, then we are nowhere close to understanding the effects of AI on the brain because the field itself is so nascent.

However, a recent MIT study sheds some light on AI’s impact, and suffice it to say, it’s not looking good. While the study explores the use of ChatGPT for writing an essay, which is distinct from using a chatbot for emotional support, it still provides a useful proxy for the effects of AI on our brains. In short, the researchers conclude that over-reliance on LLMs can “erode critical thinking and problem-solving skills” (12).

Of course, research can only follow the usage of these technologies. As adoption increases, so too will the potential test population. Despite this limitation, the results of the MIT study suggest that the popular neuroscience dogma of “neurons that fire together, wire together” still applies to AI usage.

Specifically, the participants who used ChatGPT to write their essays “reduce[d] overall neural connectivity,” which means that their neurons weren’t wiring together as much as those of the non-LLM participants. In other words, their understanding and production of their writing were more surface-level and less creative because they relied on the LLM. It’s like swimming with floaties versus without: without floaties, we must engage more and different muscles in order to keep ourselves afloat. In a similar way, by not using AI, the non-LLM participants engaged more brain areas used for creativity and executive function, which strengthened those areas and skills.

The results raise the question of how dependence on chatbots for emotional and romantic needs will impact human brains.

“Beyond anything our brain evolved to handle”

Processed foods are engineered to appeal to our taste buds. They are often a mix of carbohydrates and fat, whereas foods found in nature are typically rich in one or the other. Take Doritos, for example, which are high in sodium, fat and carbohydrates. Not only is the mix of refined carbs and fat unhealthy, but it also messes with our reward system, making these foods more addictive. One psychologist calls these ultra-processed foods “beyond anything our brain evolved to handle” (8).

The same thing could be said for AI. Another AI chatbot, Replika, has 30+ million users (13). Clearly, people are already becoming increasingly reliant on AI chatbots for emotional and romantic needs. How will this increased robo-interaction affect people’s behavior for IRL interactions?

Although we have yet to see the effects fully play out for chatbot usage, porn usage, which makes up 30% of internet traffic, could be a good proxy (14). For example, actor and comedian Chris Rock, who admitted to having a porn addiction, shared in one of his stand-up specials that

when you watch too much porn…You become like sexually autistic…You have a hard time with eye contact and verbal cues (15).

Someone who claims to have an AI boy/girlfriend likely interacts with the AI daily, and time spent with the AI is time not spent with other people. As Rock explains, the less time that someone spends socializing with real people, the weaker their ability to read social cues becomes. The only way to strengthen these muscles is to exercise them in the real world.

Corporations benefit from our addictions

Unfortunately, neither the ultra-processed food industry nor the AI industry is incentivized to help us stay healthy. Corporations care about maximizing profit, which means that it is in their best interest to keep consumers addicted and within the ecosystem.

In the world of ultra-processed foods, this means engineering food to be as addictive as possible, and pouring billions into marketing campaigns to convince us to keep buying their products. Researchers report that “Processes and ingredients used for the manufacture of ultra-processed foods are designed to create highly profitable products (low-cost ingredients, long shelf-life, branded products)” (7). For example, the parent company Coca-Cola spends $4B annually on marketing across all of its brands (16).

In the world of AI, companies like xAI are creating these products because they know that people will become addicted to them. That means more eyes on their site for longer and therefore more ad revenue. A business professor explains that

AI companions offer a powerful business advantage by fostering deep emotional connections that drive high user engagement, loyalty and repeat visits. This emotional bond boosts customer retention while enabling companies to collect sensitive personal data on user preferences (6).

The incentives of the company and those of the individual are not aligned, assuming that individuals seek genuine methods for meeting their needs (although this may be an idealistic perspective). Nevertheless, as the Nature article mentions, companies need to implement more safety guardrails about the types of conversations that their AI chatbots can have because people’s lives are at stake.

At the same time, the individual bears responsibility for how they use these companies’ products. We need to remind ourselves that these chatbots are not people, that they can be extremely flawed in their reasoning and that we should never make decisions solely based on their suggestions. Of course, this can be especially difficult for those who are already vulnerable, whether emotionally, psychologically or financially, because they can be more easily misled.

Chat, are we cooked?

Overall, the common thread between the processed food and the AI industries is that their products are empty, temporary and unfulfilling solutions for the core human needs of food and love and belonging, respectively.

Based on current trends, the health of humans is not faring well. We seem to be losing touch with our humanness. When we outsource our needs to companies whose products and services are not built with our wellbeing in mind, we are leaving our fates up to the machines.

Just as a fast food diet can never fully satiate us and contributes to the development of chronic diseases, so too does the use of AI chatbots deprive us of our ability to connect with and relate to each other. There is no replacement for in-person human connection.

However, what gives me some solace is the increased interest in IRL events. The rise of event-hosting platforms such as Luma and Partiful, both founded in 2020, seems like a response to the desire to meet people in person more. Even Apple hopped on the bandwagon, launching its Apple Invites app earlier this year (17). Especially among Gen Zers, who lost out on critical socializing years in their teens and twenties as a result of the pandemic, the craving for in-person events remains as strong as ever. Some are even saying that community event hosts may be the new influencers (18).

Irrespective of how advanced AI chatbots become, we need to go outside, meet people and make friends. Otherwise, we’re truly cooked. And we don’t need Chat to tell us that.
